Matterport & Ricoh Theta Z1 work well together?

jpierce360

My company is looking to purchase a Ricoh Theta Z1 to work with the Matterport software. I have been reading the reviews and I feel this is the right direction for my company to do exterior virtual tours. I have a few questions that I hope can be answered in this forum.

How does it work with the Matterport Capture application?
- Does the Z1 download directly to the Capture application?
- How does the Z1 shoot? Is it a complete 360 view, so I need to hide when scanning? Or does it spin like the Pro 2 camera, so I can move around?

I have been reading that some users suffer from multiple misalignments while others report no problems when using the Z1.
- What has been everyone's experience?
- Do the alignment problems come more from the Matterport Capture application than from the Z1 camera?

Post 1

WGAN Fan Club Member, Portland, Oregon

HelloPado

The Z1 works seamlessly with the Capture app! It shoots a full 360, so you will need to hide. The range from the iPad to the Z1 is pretty far; I've very rarely lost connection. A capture with the Z1 takes between 5 and 10 seconds. It's awesome. Alignment between the two cameras can be tricky, so be sure to overlap the interior with the Z1 and the exterior with the Matterport camera.

P.S. Be VERY careful with the Z1 outside. Mine blew over in a slight breeze and it's no bueno: $450 to repair. Check this forum; there are great ideas on how best to weigh down the tripod.

Best of luck. I highly recommend the combination of these two cameras!

John

Post 2

Hartland, Wisconsin

htimsabbub23

I just added the Z1 to my arsenal of cameras. Overall I'm very happy with it, and it has decreased my time by about 30 to 40%.

There are two things I noticed. The first is that in a room that is yellow toned or has yellow lights, it magnifies them to an almost unacceptable yellow cast. I actually still carry my Matterport Pro with me for the cases where I feel it's too yellow. The second is that the battery life is very short, to the point that you may only be able to finish one house. My solution was to attach a battery pack to the tripod and run a cord to the camera. In order to do this you also need a little tripod extension; otherwise the tripod will be in the way of the USB-C plug.

Post 3

WGAN Forum Founder & WGAN-TV Podcast Host, Atlanta, Georgia

DanSmigrod

Quote: Originally Posted by HelloPado

@HelloPado Ugh! We've all been there!

✓ 9 Tripods/Monopods to Consider for Your 360º 1-Click Camera

Dan

Post 4

WGAN Fan Club Member, Coeur d'Alene, Idaho

lilnitsch

@jpierce360 I swap back and forth between the Z1 & Pro2 when connecting structures, depending on the distance and lighting conditions.

Post 5

WGAN Standard Member, Los Angeles

Home3D

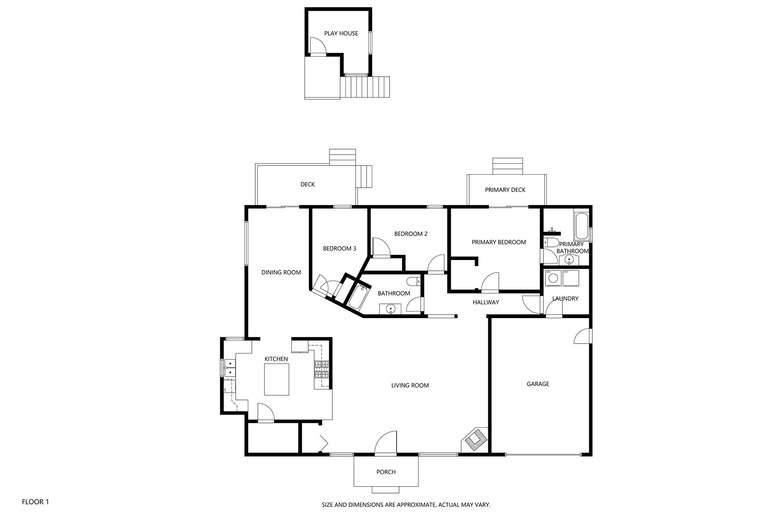

I use the Pro camera for everything possible, including exteriors, which means scanning outside only during heavy cloud cover, before dawn, or after sunset (as fast as you can; about 30-40 minutes are usable). The Pro camera aligns pretty dependably as it has depth data, though it won't work when you've ventured 3-4 meters beyond geometric objects. The IR-generated mesh is way too coarse to understand leaves, trees and bushes. But there's a lot you can do in smaller yards, and sometimes adding geometric physical objects has gotten me through tough spots.

Where I pull out the Z1 is when there is too much foliage, and especially for pools, as the Pro camera's IR cannot read water. In dollhouse views, a pool becomes a black hole. The problem is in getting the Z1 photos (converted to 3D via Cortex) to align with the good Pro scans. The best technique is to begin your first Z1 scan right on top of a previous Pro scan, so the Cortex-simulated 3D data (AI guesswork) has the best chance of finding the same spot. There can be a lot of trial and error. False alignment, delete and try again. Very frustrating and time consuming. When doing this, it is ESSENTIAL that you pay attention to the mini-map as each Z1 scan is aligned and appears. Many will be in the wrong place and must be immediately deleted lest you multiply errors by adding additional scans.

The sad part is this... There is a solution that Matterport could, but seemingly chooses not to, deliver. In the Capture app, as each new Cortex scan is added to the mini-map, the added ground area appears. If an outdoor scan by the pool shows up in the living room (let's call it scan #85), you suddenly see grass and the pool edge superimposed right by the couch, in a patch surrounding the 85 marker. Obvious error, and all you can do is delete and try again. But imagine if you could tap the 85 marker and, in addition to the option to delete it, there was an option to MOVE the scan, just as we can do with 360s used outside.
So tap MOVE, and the new scan can be rotated and dragged across the model to the proper spot, in this case outside by the pool, and then tapped again to PLACE it, correcting the error. At that point the ground image created by 85 could be visually checked for alignment with the previous Pro camera scans, and, behind the scenes, both the visual and the Cortex-created AI-simulated depth data would be locked in this new, proper position. If this were added to the Matterport system, which is clearly doable as 360s can be moved at will, creation of MP spaces using non-depth-scanning cameras would be incredibly more powerful. You could "scan" to your heart's content in gardens, yards, anywhere.

The problem is one of will, and the way MP looks at MSPs, or should I use their later term, "scan technicians". Every decision of the company seems to be made to limit, to restrict, what Matterport creators can do. They want a system that functions like Uber. When you "uber" a car, you don't care who's driving as long as the car has gas and four wheels. This is how Matterport wants their system, and us, to function: the skill level of every operator is the same, and is "good enough". They don't want a system where the skill level of the MSP matters, because then clients will NOT see us as all the same and interchangeable, which is what enables driving the labor price through the floor, just as Uber drivers experience.

But Matterport isn't fool-proof, and all MSPs are not the same. I don't have all the answers in scanning with MP, but I know I'm in the top 5% based on the scale and complexity of projects I've done (from 800 to 70,000 in a single model), and my clients know my models come out as perfect as the technology permits. It's this striving for perfection that is to the benefit of MP, but nevertheless, they've chosen to restrict capabilities that would empower those of us who care to push the platform forward.
Small example: GeoCV early on provided users with the ability to download a pano, retouch it in Photoshop and re-upload it. So it's a snap to fix little problems such as dog poop on the patio. The process is simple, just like you can download 360 panos in Matterport. They just won't let you re-upload them, because this level of MSP commitment would differentiate between creators. It's not "scalable" because humans are different.

Amir Frank, I would love your input on this conversation. On the web call two weeks ago someone asked about scanning outdoors. Your reply on screen was that MP only encourages the use of 360s (bubbles). But what about those of us who are committed to providing high-end customers with more than just 360 bubble images? It can be done. I attach a recent example I did entirely with the Pro camera, adding water to the dollhouse pool with the Z1. Help us do even better by empowering us with more control to make MP models even better... like repositioning misaligned scans.

Full property online presentation: 4541 Comber Ave, Encino, CA

Post 6

jpierce360

@Home3D - So the Z1 uses Cortex to convert the 360 picture for the Capture application? Similar to using the Pro 2 for a 360 scan, correct?

Post 7

WGAN Fan Club Member, Coeur d'Alene, Idaho

lilnitsch

@jpierce360 Correct; however, it is done automatically within the Capture app with any of the supported 360 cameras. With the Pro1/2 you have to place and then convert the 360 views. I have found that alignment when converting Pro1/2 360 views with Cortex can be kinda hit and miss.

Post 8

jpierce360

Thanks for the help, everyone. Everyone taking the time to answer a few questions really helps me feel better about purchasing the camera!!

Post 9

WGAN Fan Club Member, Coeur d'Alene, Idaho

lilnitsch

@jpierce360 My main use for the Z1s is Zillow 3D home tours: https://www.zillow.com/view-3d-home/742d32f6-e178-4029-84a9-2a4bae40a002?setAttribution=mls

Post 10

WGAN Standard Member, Los Angeles

Home3D

@jpierce360 - No, completely different, Z1 vs. a Pro 1/2. The Pro cameras use IR to actually gather precise measurements on every physical surface within their range of about 10-12 feet. The Z1 captures no depth data at all. Cortex takes the Z1's 360° photo and uses AI to 'guess' (nothing more than guess) where in space the objects it photographs actually are. It then creates a 3D mesh based on these guesses. If you're shooting an unfurnished condo, it does a pretty good job. After all, virtually every doorway in the U.S. is the same size and walls are, most often, set at 90° angles to one another. So Cortex figures out a pretty good guess. But get into complex spaces with odd-shaped objects, plants, gardens, and this can go terribly wrong.

3D modeling requires 3D spatial data and there will never be a replacement for gathering this data. IR has worked in the Pro cameras. Lidar (rumored Pro 3 next year) will be better because it works in sunlight as well as indoors. Anyone who wants to do quality MP work should own one of the Pro cameras. The other 360 cameras serve only for very simple spaces, for playing around with MP (dipping your toe in the water) and for specialized visual purposes like showing water in a pool.

Post 11

This topic is archived.